Installing a highly available Kubernetes 1.26 cluster with kubeadm

Kubernetes network plan:

- podSubnet (pod network): 10.244.0.0/16

- serviceSubnet (service network): 10.96.0.0/12
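The pod and service ranges must not overlap each other or the node network. The following is a minimal sketch of such a check, not part of kubeadm: the helper names `ip_to_int`, `cidr_bounds`, and `cidrs_overlap` are invented for this illustration, and the node network 10.168.1.0/24 is an assumption inferred from the /24 mask used for the VIP in the keepalived configuration.

```shell
# Hypothetical helpers for checking whether two IPv4 CIDR blocks overlap.
# Function bodies use ( ... ) so their variables stay local to a subshell.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Print the first and last address of a CIDR block as integers.
cidr_bounds() (
    ip=${1%/*}
    bits=${1#*/}
    base=$(ip_to_int "$ip")
    mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
    echo "$(( $base & $mask )) $(( ($base & $mask) | ($mask ^ 0xFFFFFFFF) ))"
)

# Succeed (exit 0) when the two CIDR blocks share at least one address.
cidrs_overlap() (
    set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

# Compare the planned ranges pairwise (10.168.1.0/24 is the assumed node network).
for pair in "10.244.0.0/16 10.96.0.0/12" \
            "10.244.0.0/16 10.168.1.0/24" \
            "10.96.0.0/12 10.168.1.0/24"; do
    set -- $pair
    if cidrs_overlap "$1" "$2"; then
        echo "overlap: $1 $2"
    else
        echo "ok: $1 $2"
    fi
done
```

Running the check before `kubeadm init` is cheaper than discovering a routing conflict after the CNI plugin is installed.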

Lab environment:

- Operating system: CentOS 7.9

- Hardware: 4 GiB RAM / 4 vCPUs / 60 GB disk

- Network: NAT mode

Cluster roles

| IP | Hostname | Components |
|---|---|---|
| 10.168.1.61 | master01 | apiserver, controller-manager, scheduler, kubelet, etcd, kube-proxy, container runtime, calico, keepalived, nginx |
| 10.168.1.62 | master02 | apiserver, controller-manager, scheduler, kubelet, etcd, kube-proxy, container runtime, calico, keepalived, nginx |
| 10.168.1.63 | master03 | apiserver, controller-manager, scheduler, kubelet, etcd, kube-proxy, container runtime, calico, keepalived, nginx |
| 10.168.1.64 | node01 | kube-proxy, calico, coredns, container runtime, kubelet |
| 10.168.1.60 | master | VIP |

1. Prepare the lab environment for the k8s cluster (run the following on every machine)

1.1.1 Disable SELinux and the firewall

    sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
    setenforce 0
    systemctl stop firewalld && systemctl disable firewalld

1.1.2 Configure the hosts file

    cat <<EOF >>/etc/hosts
    10.168.1.61 master01
    10.168.1.62 master02
    10.168.1.63 master03
    10.168.1.64 node01
    EOF
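Appending with `>>` adds the same entries again every time the step is re-run. A minimal sketch of an idempotent alternative follows; `add_host_entry` is a hypothetical helper name, and the file path is passed in so the function can be tried on a scratch file first.

```shell
# Append "IP NAME" to a hosts file only if NAME is not already listed
# on an uncommented line. Hypothetical helper, not a system tool.
add_host_entry() {
    # $1 = hosts file, $2 = IP address, $3 = hostname
    if ! awk -v h="$3" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
            END { exit !found }' "$1" 2>/dev/null
    then
        printf '%s %s\n' "$2" "$3" >> "$1"
    fi
}
```

For example, `add_host_entry /etc/hosts 10.168.1.61 master01` can be repeated safely for each of the four hosts.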

1.1.3 Disable swap

    swapoff -a
    sed -i 's/.*swap/#&/' /etc/fstab
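To confirm that swap will stay off after a reboot, the fstab can be checked for swap entries that are still uncommented. This is a small sketch; `active_swap_entries` is a made-up helper name, and it assumes the standard fstab layout in which the third field is the filesystem type.

```shell
# Print fstab lines that still define an active (uncommented) swap entry.
# $1 = fstab path (defaults to /etc/fstab). Hypothetical helper.
active_swap_entries() {
    awk '!/^[[:space:]]*#/ && $3 == "swap"' "${1:-/etc/fstab}"
}
```

An empty result from `active_swap_entries` means no swap will be mounted at boot; on the running system, `cat /proc/swaps` should list only its header line.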

1.1.4 Adjust kernel parameters

    modprobe br_netfilter
    echo "modprobe br_netfilter" >>/etc/profile
    cat > /etc/sysctl.d/k8s.conf <<EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    EOF
    sysctl -p /etc/sysctl.d/k8s.conf
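The three settings can be verified by reading them back from the /proc/sys tree. The sketch below assumes that each dotted key maps to a path under /proc/sys with dots replaced by slashes; `sysctl_value` and `check_k8s_sysctls` are hypothetical names, and the root directory is a parameter only so the functions can be exercised against a scratch directory.

```shell
# Read one sysctl key from the /proc/sys tree.
# $1 = dotted key, $2 = root directory (defaults to /proc/sys).
sysctl_value() {
    cat "${2:-/proc/sys}/$(printf '%s' "$1" | tr . /)"
}

# Report every required key whose live value is not 1.
# The body runs in a subshell so rc, key and val stay local.
check_k8s_sysctls() (
    rc=0
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        val=$(sysctl_value "$key" "$1" 2>/dev/null)
        if [ "$val" != "1" ]; then
            echo "$key is '$val', expected 1"
            rc=1
        fi
    done
    [ "$rc" -eq 0 ]
)
```

If the bridge keys are reported as missing, the br_netfilter module is probably not loaded.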

1.1.5 Configure the yum repositories

    sudo sed -e 's|^mirrorlist=|#mirrorlist=|g' \
        -e 's|^#baseurl=http://mirror.centos.org/centos|baseurl=https://mirrors.tuna.tsinghua.edu.cn/centos|g' \
        -i.bak \
        /etc/yum.repos.d/CentOS-*.repo
    sudo yum install -y yum-utils device-mapper-persistent-data lvm2
    yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    sudo sed -i 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo
    cat >/etc/yum.repos.d/kubernetes.repo <<EOF
    [kubernetes]
    name=kubernetes
    baseurl=https://mirrors.tuna.tsinghua.edu.cn/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    EOF
    sudo yum makecache fast

1.1.6 Configure time synchronization

    yum install ntpdate -y
    ntpdate cn.pool.ntp.org
    echo "*/5 * * * * ntpdate cn.pool.ntp.org" | crontab -

1.1.7 Install base packages

    yum install -y vim net-tools nfs-utils ipvsadm

1.2 Install the containerd service

1.2.1 Install containerd

    yum install containerd.io -y
    mkdir -p /etc/containerd
    containerd config default > /etc/containerd/config.toml

1.2.2 Edit the configuration file

    sed -i '/SystemdCgroup/s/false/true/' /etc/containerd/config.toml
    sed -i 's#registry.k8s.io/pause:3.6#registry.aliyuncs.com/google_containers/pause:3.7#' /etc/containerd/config.toml
    systemctl enable containerd --now

1.2.3 Write the /etc/crictl.yaml file

    cat > /etc/crictl.yaml <<EOF
    runtime-endpoint: unix:///run/containerd/containerd.sock
    image-endpoint: unix:///run/containerd/containerd.sock
    timeout: 10
    debug: false
    EOF
    systemctl restart containerd

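1.2.4 Configure registry mirrors for containerd

Each mirror gets its own [host."..."] table in hosts.toml; a single header listing two hosts separated by a comma is not valid TOML. Because sed -i prints nothing, the grep runs as a separate command to show the changed line.

    sed -i '/config_path/s#""#"/etc/containerd/certs.d"#' /etc/containerd/config.toml
    grep config_path /etc/containerd/config.toml
    mkdir -p /etc/containerd/certs.d/docker.io/
    cat >/etc/containerd/certs.d/docker.io/hosts.toml <<EOF
    [host."https://vh3bm52y.mirror.aliyuncs.com"]
      capabilities = ["pull"]
    [host."https://registry.docker-cn.com"]
      capabilities = ["pull"]
    EOF
    systemctl restart containerd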

1.2.5 Check containerd for errors and set the container runtime endpoint

    systemctl status containerd
    crictl config runtime-endpoint unix:///run/containerd/containerd.sock

1.3 Install the packages needed to initialize k8s

    yum install kubelet-1.26.3 kubeadm-1.26.3 kubectl-1.26.3 -y
    systemctl enable kubelet

1.4 Configure the IPVS modules

The heredoc delimiter is quoted ('EOF') so that the ${...} variables and $? are written into the script literally instead of being expanded by the current shell.

    cat >/etc/sysconfig/modules/ipvs.modules <<'EOF'
    #!/bin/bash
    ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
    for kernel_module in ${ipvs_modules}; do
        /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1
        if [ $? -eq 0 ]; then
            /sbin/modprobe ${kernel_module}
        fi
    done
    EOF
    chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

2. Configure high availability (run the following only on the master nodes)

2.1 Make the k8s apiserver highly available with keepalived and nginx

master01:

    hostnamectl set-hostname master01 && bash

    ssh-keygen
    ssh-copy-id master01
    ssh-copy-id master02
    ssh-copy-id master03
    ssh-copy-id node01

    yum install epel-release -y
    yum install nginx keepalived nginx-mod-stream -y

Back up the packaged configuration, then write the following to /etc/nginx/nginx.conf:

    mv /etc/nginx/nginx.conf /etc/nginx/nginx.bak
    vim /etc/nginx/nginx.conf

    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;
    include /usr/share/nginx/modules/*.conf;
    events {
        worker_connections 1024;
    }
    # Layer-4 load balancing for the apiserver on the three master nodes
    stream {
        log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
        access_log /var/log/nginx/k8s-access.log main;
        upstream k8s-apiserver {
            server 10.168.1.61:6443 weight=5 max_fails=3 fail_timeout=30s;
            server 10.168.1.62:6443 weight=5 max_fails=3 fail_timeout=30s;
            server 10.168.1.63:6443 weight=5 max_fails=3 fail_timeout=30s;
        }
        server {
            listen 16443; # nginx shares the host with the apiserver, so it cannot listen on 6443 without a conflict
            proxy_pass k8s-apiserver;
        }
    }
    http {
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';
        access_log /var/log/nginx/access.log main;
        sendfile on;
        tcp_nopush on;
        tcp_nodelay on;
        keepalive_timeout 65;
        types_hash_max_size 2048;
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
    }

Back up the packaged keepalived configuration and write a new /etc/keepalived/keepalived.conf:

    mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.bak
    vim /etc/keepalived/keepalived.conf

    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id NGINX_MASTER
    }
    vrrp_script check_nginx {
        script "/etc/keepalived/check_nginx.sh"
    }
    vrrp_instance VI_1 {
        state MASTER
        interface eth0          # change to the actual NIC name
        virtual_router_id 51    # VRRP router ID; must be unique per instance
        priority 100            # priority; the backup servers use lower values (90 and 80)
        advert_int 1            # VRRP advertisement interval, 1 second by default
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        # virtual IP
        virtual_ipaddress {
            10.168.1.60/24
        }
        track_script {
            check_nginx
        }
    }

Create the health-check script:

    vim /etc/keepalived/check_nginx.sh

    #!/bin/bash
    # Check whether an nginx process exists
    if pgrep "nginx" > /dev/null
    then
        echo "nginx is running"
    else
        echo "nginx is not running"
        systemctl stop keepalived
    fi

Start the nginx and keepalived services

    chmod a+x /etc/keepalived/check_nginx.sh
    systemctl start nginx && systemctl enable nginx
    systemctl start keepalived && systemctl enable keepalived

    systemctl status nginx
    systemctl status keepalived
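Beyond the service status, it is worth confirming that the VIP 10.168.1.60 is actually bound on the node that should hold it. The sketch below parses the one-line-per-address output of `ip -o -4 addr show`, where the fourth field is the address with its prefix length; `has_address` is a hypothetical helper name.

```shell
# Succeed when the given IPv4 address appears in `ip -o -4 addr show`
# output supplied on stdin. $1 = address without the prefix length.
has_address() {
    awk -v ip="$1" '
        { split($4, a, "/"); if (a[1] == ip) found = 1 }
        END { exit !found }'
}
```

On a master node this could be used as `ip -o -4 addr show | has_address 10.168.1.60 && echo "VIP is on this node"`. With the priorities used in this guide, the VIP is expected on master01 while its keepalived is running.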

master02:

    hostnamectl set-hostname master02 && bash

    ssh-keygen
    ssh-copy-id master01
    ssh-copy-id master02
    ssh-copy-id master03
    ssh-copy-id node01

    yum install epel-release -y
    yum install nginx keepalived nginx-mod-stream -y

Back up the packaged configuration, then write the following to /etc/nginx/nginx.conf:

    mv /etc/nginx/nginx.conf /etc/nginx/nginx.bak
    vim /etc/nginx/nginx.conf

    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;
    include /usr/share/nginx/modules/*.conf;
    events {
        worker_connections 1024;
    }
    # Layer-4 load balancing for the apiserver on the three master nodes
    stream {
        log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
        access_log /var/log/nginx/k8s-access.log main;
        upstream k8s-apiserver {
            server 10.168.1.61:6443 weight=5 max_fails=3 fail_timeout=30s;
            server 10.168.1.62:6443 weight=5 max_fails=3 fail_timeout=30s;
            server 10.168.1.63:6443 weight=5 max_fails=3 fail_timeout=30s;
        }
        server {
            listen 16443; # nginx shares the host with the apiserver, so it cannot listen on 6443 without a conflict
            proxy_pass k8s-apiserver;
        }
    }
    http {
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';
        access_log /var/log/nginx/access.log main;
        sendfile on;
        tcp_nopush on;
        tcp_nodelay on;
        keepalive_timeout 65;
        types_hash_max_size 2048;
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
    }

Back up the packaged keepalived configuration and write a new /etc/keepalived/keepalived.conf:

    mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.bak
    vim /etc/keepalived/keepalived.conf

    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id NGINX_MASTER
    }
    vrrp_script check_nginx {
        script "/etc/keepalived/check_nginx.sh"
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0          # change to the actual NIC name
        virtual_router_id 51    # VRRP router ID; must be unique per instance
        priority 90             # priority; lower than the 100 used on master01
        advert_int 1            # VRRP advertisement interval, 1 second by default
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        # virtual IP
        virtual_ipaddress {
            10.168.1.60/24
        }
        track_script {
            check_nginx
        }
    }

Create the health-check script:

    vim /etc/keepalived/check_nginx.sh

    #!/bin/bash
    # Check whether an nginx process exists
    if pgrep "nginx" > /dev/null
    then
        echo "nginx is running"
    else
        echo "nginx is not running"
        systemctl stop keepalived
    fi

Start the nginx and keepalived services

    chmod a+x /etc/keepalived/check_nginx.sh
    systemctl start nginx && systemctl enable nginx
    systemctl start keepalived && systemctl enable keepalived

    systemctl status nginx
    systemctl status keepalived

master03:

    hostnamectl set-hostname master03 && bash

    ssh-keygen
    ssh-copy-id master01
    ssh-copy-id master02
    ssh-copy-id master03
    ssh-copy-id node01

    yum install epel-release -y
    yum install nginx keepalived nginx-mod-stream -y

Back up the packaged configuration, then write the following to /etc/nginx/nginx.conf:

    mv /etc/nginx/nginx.conf /etc/nginx/nginx.bak
    vim /etc/nginx/nginx.conf

    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;
    include /usr/share/nginx/modules/*.conf;
    events {
        worker_connections 1024;
    }
    # Layer-4 load balancing for the apiserver on the three master nodes
    stream {
        log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
        access_log /var/log/nginx/k8s-access.log main;
        upstream k8s-apiserver {
            server 10.168.1.61:6443 weight=5 max_fails=3 fail_timeout=30s;
            server 10.168.1.62:6443 weight=5 max_fails=3 fail_timeout=30s;
            server 10.168.1.63:6443 weight=5 max_fails=3 fail_timeout=30s;
        }
        server {
            listen 16443; # nginx shares the host with the apiserver, so it cannot listen on 6443 without a conflict
            proxy_pass k8s-apiserver;
        }
    }
    http {
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';
        access_log /var/log/nginx/access.log main;
        sendfile on;
        tcp_nopush on;
        tcp_nodelay on;
        keepalive_timeout 65;
        types_hash_max_size 2048;
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
    }

Back up the packaged keepalived configuration and write a new /etc/keepalived/keepalived.conf:

    mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.bak
    vim /etc/keepalived/keepalived.conf

    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id NGINX_MASTER
    }
    vrrp_script check_nginx {
        script "/etc/keepalived/check_nginx.sh"
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0          # change to the actual NIC name
        virtual_router_id 51    # VRRP router ID; must be unique per instance
        priority 80             # priority; lowest of the three master nodes
        advert_int 1            # VRRP advertisement interval, 1 second by default
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        # virtual IP
        virtual_ipaddress {
            10.168.1.60/24
        }
        track_script {
            check_nginx
        }
    }

Create the health-check script:

    vim /etc/keepalived/check_nginx.sh

    #!/bin/bash
    # Check whether an nginx process exists
    if pgrep "nginx" > /dev/null
    then
        echo "nginx is running"
    else
        echo "nginx is not running"
        systemctl stop keepalived
    fi

Start the nginx and keepalived services

    chmod a+x /etc/keepalived/check_nginx.sh
    systemctl start nginx && systemctl enable nginx
    systemctl start keepalived && systemctl enable keepalived

    systemctl status nginx
    systemctl status keepalived
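With priorities 100, 90, and 80, the VIP is expected on the highest-priority node whose keepalived is still running, and a node whose check script has stopped keepalived drops out of the election. The following is a deliberately simplified model of that rule, not keepalived's actual implementation: it ignores advertisement timing and preemption details, and `elect_vip_holder` and its input format are invented for the illustration.

```shell
# Read lines of "name priority healthy(yes|no)" on stdin and print the
# healthy node with the highest priority. Fails if no node is healthy.
elect_vip_holder() {
    awk '
        $3 == "yes" && ($2 + 0) > (best + 0) { best = $2; name = $1 }
        END { if (name != "") print name; else exit 1 }'
}

# Example: nginx has died on master01, so its keepalived was stopped.
printf '%s\n' 'master01 100 no' 'master02 90 yes' 'master03 80 yes' | elect_vip_holder
```

Because the check script stops keepalived rather than lowering its priority, a recovered node rejoins the election only after keepalived is started again by hand.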

node01:

    hostnamectl set-hostname node01 && bash

3. Initialize the Kubernetes cluster

master01:

    kubeadm config print init-defaults > kubeadm.yaml

Modify the generated configuration to suit your needs, for example the imageRepository value and the kube-proxy mode (ipvs). Note that because containerd is used as the container runtime, cgroupDriver must be set to systemd when the node is initialized.

The complete kubeadm.yaml file is as follows:

    apiVersion: kubeadm.k8s.io/v1beta3
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 10.168.1.61
      bindPort: 6443
    nodeRegistration:
      criSocket: unix:///var/run/containerd/containerd.sock
      imagePullPolicy: IfNotPresent
      taints: null
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: 1.26.3
    controlPlaneEndpoint: 10.168.1.60:16443
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
      podSubnet: 10.244.0.0/16
    scheduler: {}
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: ipvs
    ---
    apiVersion: kubelet.config.k8s.io/v1beta1
    kind: KubeletConfiguration
    cgroupDriver: systemd
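Before running kubeadm init, a quick sanity check can confirm that the file points at the VIP and nginx port rather than at a single master. This is only a rough sketch: `yaml_scalar` is a hypothetical helper that does a line-based lookup (it is not a YAML parser) and assumes unquoted `key: value` lines like those in the file above.

```shell
# Print the first scalar value found for "key:" in a simple YAML file.
# $1 = file, $2 = key. Line-based lookup only; nesting is ignored.
yaml_scalar() {
    awk -v k="$2:" '$1 == k { print $2; exit }' "$1"
}

# Compare the endpoint with the VIP and nginx port used in this guide.
if [ -f kubeadm.yaml ]; then
    endpoint=$(yaml_scalar kubeadm.yaml controlPlaneEndpoint)
    if [ "$endpoint" != "10.168.1.60:16443" ]; then
        echo "controlPlaneEndpoint is '$endpoint', expected 10.168.1.60:16443" >&2
    fi
fi
```

The same helper can read podSubnet and serviceSubnet to compare them with the network plan.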

Initialize the cluster on master01 with kubeadm.yaml, then set up kubectl access for the current user:

    kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

Join master02 to the cluster

Create the certificate directories on master02:

    mkdir -p /etc/kubernetes/pki/
    mkdir -p /etc/kubernetes/pki/etcd/

Copy the certificates from master01:

    scp /etc/kubernetes/pki/ca.crt master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/ca.key master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.key master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.pub master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.crt master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.key master02:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/etcd/ca.crt master02:/etc/kubernetes/pki/etcd/
    scp /etc/kubernetes/pki/etcd/ca.key master02:/etc/kubernetes/pki/etcd/
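The same eight files are copied to every additional control-plane node, so the list can be generated by a loop. The sketch below only prints the scp commands (a dry run) so they can be reviewed first; `print_cert_copy_commands` is a hypothetical helper name.

```shell
# Print the scp commands that copy the shared CA and service-account
# material to a joining control-plane node. $1 = target host.
# The body runs in a subshell so f and dir stay local.
print_cert_copy_commands() (
    for f in ca.crt ca.key sa.key sa.pub \
             front-proxy-ca.crt front-proxy-ca.key \
             etcd/ca.crt etcd/ca.key; do
        dir=$(dirname "/etc/kubernetes/pki/$f")
        echo "scp /etc/kubernetes/pki/$f $1:$dir/"
    done
)

print_cert_copy_commands master02
```

Once the output looks right, it can be executed on master01 with `print_cert_copy_commands master02 | sh`, and again with master03 as the argument.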

On master01, print the join command:

    kubeadm token create --print-join-command

Run on master02, substituting the token and hash printed on your own cluster:

    kubeadm join 10.168.1.60:16443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:7e7f1e14d31f6b395b5301a41e84ef01c47685897d7ede57eef2fd827b681f9b \
        --control-plane
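The value passed to --discovery-token-ca-cert-hash is the SHA-256 digest of the cluster CA's public key, so it can be recomputed on a master node if the printed join command has been lost. The pipeline below follows the openssl recipe given in the kubeadm documentation and assumes the default RSA CA key; the wrapper name `ca_cert_hash` is made up for this sketch.

```shell
# Print the hex SHA-256 digest of the CA public key.
# $1 = CA certificate path (defaults to the kubeadm location).
ca_cert_hash() {
    ca_cert=${1:-/etc/kubernetes/pki/ca.crt}
    if [ ! -r "$ca_cert" ]; then
        echo "cannot read $ca_cert" >&2
        return 1
    fi
    openssl x509 -pubkey -in "$ca_cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
```

The join command expects the digest prefixed with `sha256:`, for example `--discovery-token-ca-cert-hash "sha256:$(ca_cert_hash)"`.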

Join master03 to the cluster

Create the certificate directories on master03:

    mkdir -p /etc/kubernetes/pki/
    mkdir -p /etc/kubernetes/pki/etcd/

Copy the certificates from master01:

    scp /etc/kubernetes/pki/ca.crt master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/ca.key master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.key master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.pub master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.crt master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.key master03:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/etcd/ca.crt master03:/etc/kubernetes/pki/etcd/
    scp /etc/kubernetes/pki/etcd/ca.key master03:/etc/kubernetes/pki/etcd/

On master01, print the join command:

    kubeadm token create --print-join-command

Run on master03, substituting the token and hash printed on your own cluster:

    kubeadm join 10.168.1.60:16443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:7e7f1e14d31f6b395b5301a41e84ef01c47685897d7ede57eef2fd827b681f9b \
        --control-plane

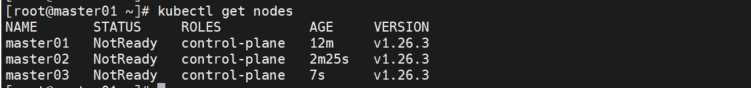

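Check the cluster status from any master node (for example, with `kubectl get nodes`).

Scale out the k8s cluster: add the first worker node

Run on node01:

    kubeadm join 10.168.1.60:16443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:7e7f1e14d31f6b395b5301a41e84ef01c47685897d7ede57eef2fd827b681f9b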

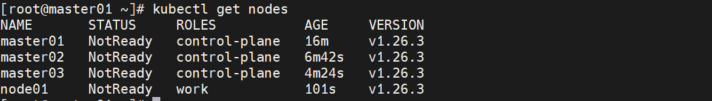

Check the status of all nodes in the cluster.

Label node01 as a worker node:

    kubectl label nodes node01 node-role.kubernetes.io/work=work

Check the nodes again.

4. Install Calico (only on master01)

    yum install -y wget
    wget https://get.helm.sh/helm-v3.10.3-linux-amd64.tar.gz
    tar -zxvf helm-v3.10.3-linux-amd64.tar.gz
    mv linux-amd64/helm /usr/local/bin/

    wget https://github.com/projectcalico/calico/releases/download/v3.24.5/tigera-operator-v3.24.5.tgz
    helm show values tigera-operator-v3.24.5.tgz >values.yaml

In values.yaml, change enabled under apiServer from true to false:

    apiServer:
      enabled: false

The complete file is as follows:

    imagePullSecrets: {}
    installation:
      enabled: true
      kubernetesProvider: ""
    apiServer:
      enabled: false
    certs:
      node:
        key:
        cert:
        commonName:
      typha:
        key:
        cert:
        commonName:
        caBundle:
    # Resource requests and limits for the tigera/operator pod.
    resources: {}
    # Tolerations for the tigera/operator pod.
    tolerations:
    - effect: NoExecute
      operator: Exists
    - effect: NoSchedule
      operator: Exists
    # NodeSelector for the tigera/operator pod.
    nodeSelector:
      kubernetes.io/os: linux
    # Custom annotations for the tigera/operator pod.
    podAnnotations: {}
    # Custom labels for the tigera/operator pod.
    podLabels: {}
    # Image and registry configuration for the tigera/operator pod.
    tigeraOperator:
      image: tigera/operator
      version: v1.28.5
      registry: quay.io
    calicoctl:
      image: docker.io/calico/ctl
      tag: v3.24.5

Install Calico with helm:

    helm install calico tigera-operator-v3.24.5.tgz -n kube-system --create-namespace -f values.yaml

Check that the pods are running; if there are no errors, the installation succeeded.

Check again that all cluster nodes are Ready.
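The readiness check can be scripted by filtering the node list. The sketch below assumes the usual column layout of `kubectl get nodes --no-headers`, in which the first column is the node name and the second is its STATUS; `not_ready_nodes` is a hypothetical helper name.

```shell
# Print the names of nodes whose STATUS does not start with "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin.
not_ready_nodes() {
    awk '$2 !~ /^Ready/ { print $1 }'
}
```

For example, `kubectl get nodes --no-headers | not_ready_nodes` prints nothing once Calico is up and every node has become Ready.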

Last updated: 2023-03-25 13:25  Author: admin